Efficient Privacy-Preserving ML Using TVM

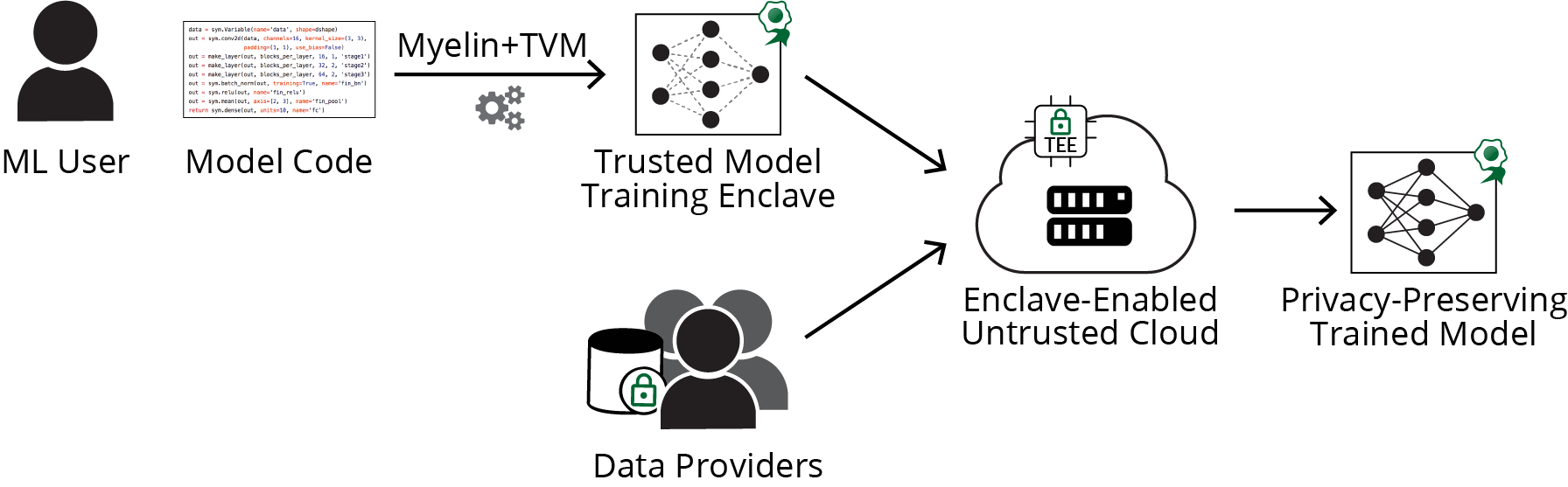

This post describes Myelin, a framework for privacy-preserving machine learning in trusted hardware enclaves, and how TVM makes Myelin fast. The key idea is that TVM, unlike other popular ML frameworks, compiles models into lightweight, optimized, and dependency-free libraries that fit into resource-constrained enclaves.

Give creating a privacy-preserving ML model a try! Check out the example code available in the TVM repo.

Motivation: Privacy-Preserving ML

Machine learning models benefit from large and diverse datasets. Unfortunately, using such datasets often requires trusting a centralized data aggregator or computation provider. For sensitive applications like healthcare and finance, this is undesirable because it could compromise patient privacy or divulge trade secrets. Recent advances in secure and privacy-preserving computation, including trusted execution environments and differential privacy, offer a way for mutually distrusting parties to efficiently train a machine learning model without compromising the training data. We use TVM to make our privacy-preserving ML framework fast.

Use Cases

- private MLaaS: a cloud provider runs their architecture on your data. You get the model outputs, your data stays private, and the cloud provider knows that you can’t steal the model.

- trustworthy ML competitions: you train a model on contest data. The contest organizer sends private test data to your model and gets a verifiable report of accuracy. Your model stays safe until the organizer decides to purchase it. Other participants can’t cheat by training on test data.

- training on shared private data: you (a researcher) want to train a model on several hospitals’ data. Directly sharing is too complicated. Instead, have a “trusted third party” train a privacy-preserving model.

- ML on the Blockchain

Background

Trusted Execution Environments

A trusted execution environment (TEE) essentially allows a remote user to provably run code on another person’s machine without revealing the computation to the hardware provider.

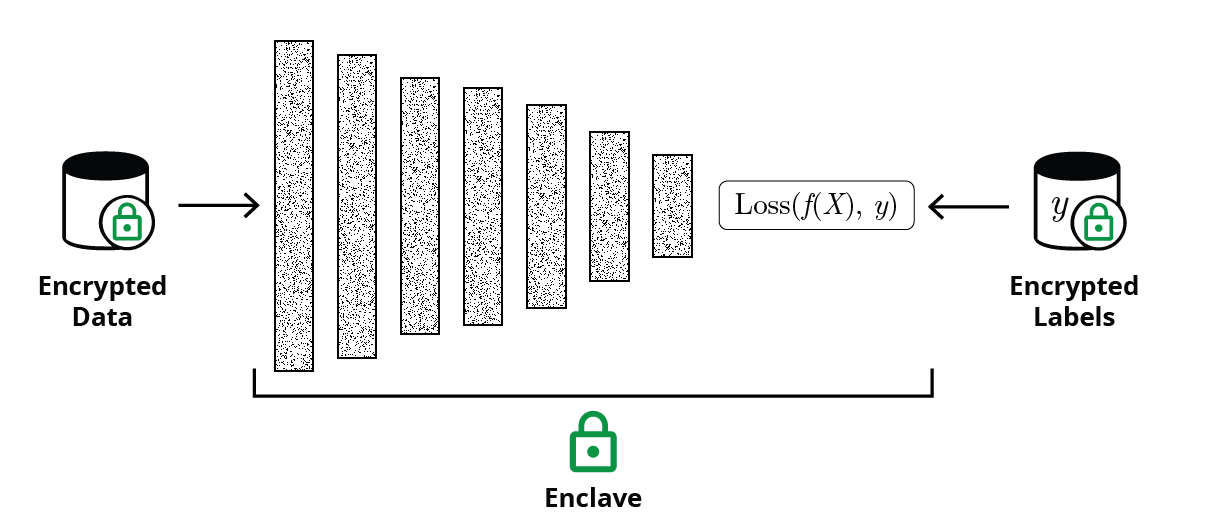

More technically, the TEE provides a secure enclave of isolated/encrypted memory and CPU registers, as well as a trusted source of randomness. The TEE can also send a signed attestation of the code that’s loaded so that the remote user can verify that the enclave has been correctly loaded. This process, known as remote attestation, can be used to establish a secure communication channel into the enclave. The remote user can then provision it with secrets like private keys, model parameters, and training data.
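To make the attestation check concrete, here is a toy sketch of the idea in plain Python. It is not how SGX or any real TEE implements attestation: real hardware signs with an asymmetric key and the verifier checks a vendor certificate chain, whereas this sketch uses an HMAC with a shared `HARDWARE_KEY` as a stand-in. All names (`measure`, `attest`, `verify`, `HARDWARE_KEY`) are hypothetical.

```python
import hashlib
import hmac

# Hypothetical stand-in for the key burned into the processor. Real TEEs use
# asymmetric signatures; a shared HMAC key only keeps this sketch short.
HARDWARE_KEY = b"toy-hardware-root-of-trust"


def measure(enclave_code: bytes) -> bytes:
    """Hash of the code loaded into the enclave (its "measurement")."""
    return hashlib.sha256(enclave_code).digest()


def attest(enclave_code: bytes, nonce: bytes) -> tuple:
    """Enclave side: return the measurement and a signature over nonce + measurement."""
    measurement = measure(enclave_code)
    signature = hmac.new(HARDWARE_KEY, nonce + measurement, hashlib.sha256).digest()
    return measurement, signature


def verify(expected_code: bytes, nonce: bytes, measurement: bytes, signature: bytes) -> bool:
    """Remote user side: check the signature, then check that the expected code was loaded."""
    expected_sig = hmac.new(HARDWARE_KEY, nonce + measurement, hashlib.sha256).digest()
    signature_ok = hmac.compare_digest(signature, expected_sig)
    code_ok = hmac.compare_digest(measurement, measure(expected_code))
    return signature_ok and code_ok
```

The fresh nonce chosen by the remote user prevents an old attestation from being replayed. Only after `verify` succeeds would the user send secrets into the enclave.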

Compared to pure crypto methods like secure multi-party computation (MPC) and fully-homomorphic encryption (FHE), TEEs are several orders of magnitude faster and support general-purpose computation (i.e. not just arithmetic operations). Perhaps the only drawbacks are the additional trust assumptions in the hardware root of trust (a key burned into the processor) and the loaded software.

Trust assumptions notwithstanding, TEE technology is becoming increasingly widespread and is playing a major role in practical privacy-preservation. In fact, general-purpose TEEs already exist in commodity hardware like Intel SGX and ARM TrustZone. Additionally, the fully open-source Keystone enclave is on the way.

Differential Privacy

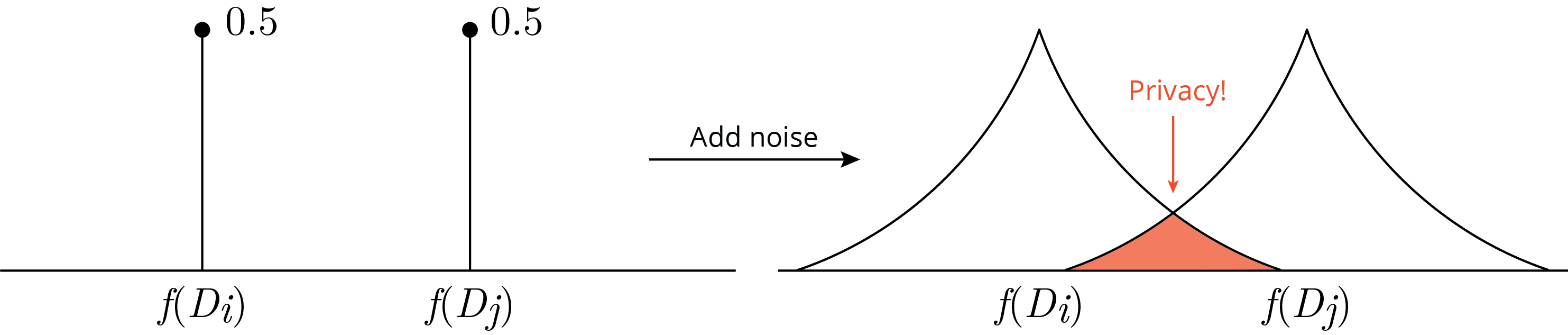

Differential privacy (DP) provides a formal guarantee that models trained on similar datasets are indistinguishable. Informally, a user’s privacy is not compromised by choosing to contribute data to a model.

In other words, given the output of an algorithm on two datasets which differ in only a single record, differential privacy upper bounds the probability that an adversary can determine which dataset was used. An algorithm may be made DP using a mechanism which adds noise to the algorithm’s output. The amount of noise is calibrated to how much the output depends on any particular input. If you’re familiar with hypothesis testing: when outcomes A and B each have probability 0.5, applying a DP mechanism is like convolving with a probability distribution, and the privacy lies in the resulting false positive and false negative rates. Since deep learning models tend to generalize well, the amount of noise is often less than might be expected.
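As a small illustration of noise calibrated to sensitivity, the sketch below applies the standard Laplace mechanism to a counting query. It is a generic textbook example, not code from Myelin; the function names and the `epsilon` privacy parameter are assumptions introduced here. Adding or removing one record changes a count by at most 1, so the noise scale is `1 / epsilon`.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Count matching records, then add noise calibrated to the query's sensitivity."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one record changes the count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    ages = [23, 35, 41, 58, 62]
    # Smaller epsilon means more noise and stronger privacy.
    print(private_count(ages, lambda age: age > 40, epsilon=0.5, rng=rng))
```

Any single released count is noisy, but the noise has zero mean, so aggregate statistics remain useful.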

Running a DP training algorithm in a TEE ensures that the DP mechanism is faithfully applied.

Efficient Privacy-Preserving ML Using TVM

One of the primary challenges of working with a TEE is that the code running within does not have access to the untrusted OS. This means that the trusted software cannot create threads or perform I/O. Practically speaking, the result is that numerical libraries like OpenBLAS, let alone frameworks like PyTorch and TensorFlow, cannot run directly in enclaves.

TEEs actually have a similar programming model to resource-constrained hardware accelerators. This is exactly what TVM is made for! In the privacy workflow, a user first defines an entire training graph in the high-level graph specification language. TVM then compiles the model and outputs a static library containing optimized numerical kernels which can easily be loaded into a TEE. Since the kernels are automatically generated and have strict bounds checking, they expose a small attack surface. They are supported by a lightweight, memory-safe Rust runtime which may also be easily reviewed for safety and correctness.

Of course, safety is most useful when practically applicable. Fortunately, TVM modules in enclaves have comparable performance to native CPU-based training. By coordinating threads using the untrusted runtime, a single TVM enclave can fully utilize the resources of its host machine. Moreover, it’s not difficult to imagine a secure parameter server which orchestrates entire datacenters of enclave-enabled machines.

TVM also provides opportunities for more subtle optimization of privacy-preserving algorithms. Indeed, its fine-grained scheduling features allow speedups when using differential privacy. For instance, the tightest DP bounds may be obtained from clipping the gradients of each training example and adding noise to each [1]. In autograd frameworks, this requires forwarding the model for each example in the minibatch (though only one backward pass is needed) [2]. Using TVM, however, per-example gradient clipping is straightforward: instead of scheduling each weight update as a single reduction over both batch and feature dimensions, the reduction is split into two. The reduction over features is followed by clipping and noising, and the result is then summed over examples to obtain the weight update. Thus, TVM allows applying differential privacy without introducing overhead greater than what is required by the technique. And if one wants to get really fancy, it’s possible to fuse the clipping and noising operations and apply them in-place to further trim down latency and memory usage.
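The split reduction can be sketched in plain Python. This is not TVM's scheduling API, only an illustration of the order of operations described above. The tensor layout is an assumption made here: `x[b][p][i]` holds layer inputs and `d[b][p][o]` holds output gradients for example `b` at position `p`. All function names, `clip_norm`, and `noise_std` are hypothetical.

```python
import math
import random


def per_example_grads(x, d):
    """Stage 1: reduce over positions only, keeping the batch axis.

    Returns g[b][o][i], the weight gradient contributed by example b alone.
    """
    return [
        [[sum(db[p][o] * xb[p][i] for p in range(len(xb)))
          for i in range(len(xb[0]))]
         for o in range(len(db[0]))]
        for xb, db in zip(x, d)
    ]


def clip_and_noise(g, clip_norm, noise_std, rng):
    """Stage 2: scale one example's gradient to L2 norm <= clip_norm, then add Gaussian noise."""
    norm = math.sqrt(sum(v * v for row in g for v in row))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [[v * scale + rng.gauss(0.0, noise_std) for v in row] for row in g]


def private_weight_grad(x, d, clip_norm, noise_std, rng):
    """Stage 3: sum the clipped, noised per-example gradients over the batch."""
    processed = [clip_and_noise(g, clip_norm, noise_std, rng)
                 for g in per_example_grads(x, d)]
    rows, cols = len(processed[0]), len(processed[0][0])
    return [[sum(g[o][i] for g in processed) for i in range(cols)]
            for o in range(rows)]
```

A single fused reduction over `b` and `p` would produce only the summed gradient, leaving no point at which to clip each example. Keeping the batch axis until after stage 2 is what makes per-example clipping possible without extra forward passes.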

For benchmarks on realistic workloads, please refer to the tech report Efficient Deep Learning on Multi-Source Private Data. And, of course, feel free to give the framework a spin in the TVM SGX example.

Conclusion

The next generation of learning systems will be ushered in by privacy. As TEE technology becomes better understood and more widely available, it makes sense to leverage it as a resource for privacy-preserving machine learning and analytics. TVM is well poised to facilitate development of this use case in both research and deployment.

Bio & Acknowledgement

Nick is a PhD student in Prof. Dawn Song’s lab at UC Berkeley. His research interest is in the general domain of ML on shared private data, but this is really just an excuse to mess with Rust, security monitors, hardware enclaves, and compilers like TVM.

Thanks to Tianqi Chen for the code reviews!

References

[1] Deep Learning with Differential Privacy

[2] Efficient Per-Example Gradient Computations